Mistral AI Instruct v0.1 model evaluation in real world use cases

Overview

Since the first model from Mistral AI was released, a lot happened. Less than 7 days after the release, the team at Alignment Lab even fine tuned the model with the OpenOrca dataset and shared an hybrid Mistral Orca model.

A lot of experiments are currently being run by the community as Mistral AI released the model weights under a permissive license (Apache 2.0).

I ran some real-world tests on the model to evaluate its performances. I took gpt-3.5-turbo as a baseline.

Evaluation requirements

I focused on evaluating the model with the following key requirements:

- Restrict tests using a quantized version of the instruct model so I can run inference on Apple Silicon MacBook Pro M1 without resorting to purchase GPU Cloud services

- Evaluate parsing and information extraction performances

- Evaluate summarization capabilities

- Evaluate hallucinations

This article is not a replacement for standard llm benchmarks, its goal is to provide insights on where the model capabilities could be the best fit for your application.

I ran inference using the llama.cpp library and PyLLMCore.

Results and interpretation : Quantization has significant impacts

The results shared here should be interpreted with the fact that I am using a quantized version (Q4_K_M) that yields lower quality outputs.

Quantized version of Mistral AI Instruct v0.1

I used the Q4_K_M version from The Bloke repository. That means the the original weights (fp16) were quantized to 4 bits but it enabled a maximum RAM usage limited to 6.87 GB.

Parsing and extracting unstructured content tasks

This is where Mistral AI excels.

When paired with the grammar sampling constraints from the llama.cpp and the dynamic schema generation, parsing is really simplified.

We provide a JSON Schema or a Python data class and raw text without any prompt and infer a structure matching what we intended.

For example, we feed the raw text taken from the official release announcement:

Mistral AI team is proud to release Mistral 7B, the most powerful language

model for its size to date.

Mistral 7B in short

Mistral 7B is a 7.3B parameter model that:

Outperforms Llama 2 13B on all benchmarks

Outperforms Llama 1 34B on many benchmarks

Approaches CodeLlama 7B performance on code, while remaining good at English

tasks

Uses Grouped-query attention (GQA) for faster inference

Uses Sliding Window Attention (SWA) to handle longer sequences at smaller cost

We’re releasing Mistral 7B under the Apache 2.0 license, it can be used

without restrictions.

Download it and use it anywhere (including locally) with our reference

implementation

Deploy it on any cloud (AWS/GCP/Azure), using vLLM inference server

and skypilot

Use it on HuggingFace

Mistral 7B is easy to fine-tune on any task. As a demonstration, we’re

providing a model fine-tuned for chat, which outperforms Llama 2 13B chat.When paired with a fairly generic schema like the following:

@dataclass

class Content:

content_classification: str

entity: str

key_insights: List[str]

call_to_action: List[str]Running inference will yield the following:

Content(

content_classification='News',

entity='Mistral AI',

key_insights=[

"""Mistral 7B is a 7.3B parameter model that outperforms Llama 2 13B

on all benchmarks and outperforms Llama 1 34B on many benchmarks.""",

"""Mistral 7B uses Grouped-query attention (GQA) for faster inference

and Sliding Window Attention (SWA) to handle longer sequences at

smaller cost."""

],

call_to_action=[

'Download Mistral 7B',

'Use Mistral 7B on Hugging Face',

'Deploy Mistral 7B on any cloud',

'Fine-tune Mistral 7B on any task'

]

)Similar results were obtained with GPT-3.5.

What's interesting is to further specify what we need by refining the schema.

- Side note: A few years ago, I studied how we can express implementation expectations when writing a schema and gave a talk when data classes were integrated in Python 3.7. See timecode 17:35 of Unexpected Dataclasses

When refining the schema to extract information:

@dataclass

class Content:

classification: str

entity: str

top_3_key_insights: List[str]

call_to_action: List[str]

full_text: str

summary_in_50_words: strWe get approximately what we asked:

Content(

classification='News',

entity='Mistral AI',

top_3_key_insights=[

"""Mistral 7B is a 7.3B parameter model that outperforms Llama 2 13B

on all benchmarks.""",

"""It uses Grouped-query attention (GQA) for faster inference and

Sliding Window Attention (SWA) to handle longer sequences at smaller

cost.""",

"""Mistral 7B is easy to fine-tune on any task, as demonstrated by a

model fine-tuned for chat that outperforms Llama 2 13B chat."""

],

call_to_action=[

'Download Mistral 7B',

'Fine-tune on Hugging Face',

'Deploy on cloud'

],

full_text="""Mistral AI team is proud to release Mistral 7B...""",

summary_in_50_words="""Mistral AI releases Mistral 7B, the most

powerful language model for its size to date.

It outperforms Llama 2 13B on all benchmarks and is easy to

fine-tune on any task."""

)Parsing and fixing text output from OCR

One of the interesting use cases for local language models is the ability to interpret damaged written content such as output from OCR.

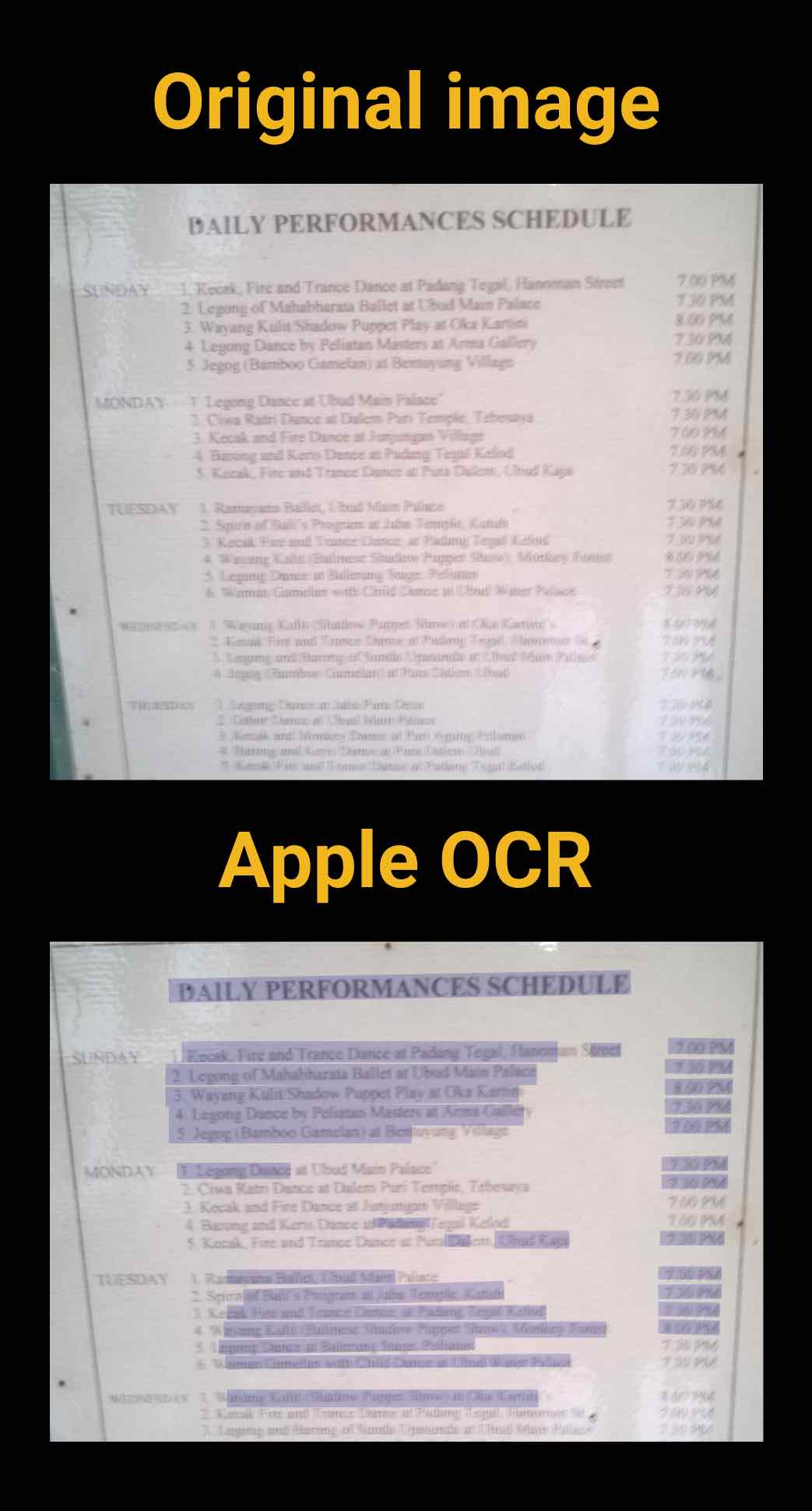

Fixing OCR outputs with LLM Mistral Instruct

I used a blurry image to generate OCR defects and inconsistencies, let's try to salvage what we can:

I know the data is messy so my intent is to get an idea of what the image is and extract what we can.

OCR_OUTPUT:

"""

DAILY PERFORMANCES SCHEDULE

Kocak. Fire and Trance Dance at Padang Tegal, Hanon

2 Legong of Mahabharata Ballet at Ubad Main Palace

3. Wayang Kulit Shadow Puppet Play at Ola Kartin

4. Legong Dance by Peliatan Masters at Arina Galler

5. Jegog (Bamboo Gamelan) at Ben

1 Legong Dance

treet

100 PM

130 PM

8 00 PM

130 PM

7.00 PM

130 PM

1 30 Pu

Dealinings

Dall

Thad Kaja

navama Baalllen., Uhud Mais

of Batrs Program at Jain Temple Kanui call Fire and Trance Dance, at Padang: Tegal Kello mane Kalia Ballitese Sitadow Paper Show Miney Forest egome Dance an Billerune Stue Petrats

aman Gamelan with Child Dame all Hut we aer Balliss

lavang Fallo (Situdiov Punnes Sitew) at cOla Karin

130 %

130 D

150PM

1 30 90

2. 20 MU

3.

"""

> Reconstruct content from OCR_OUTPUT.The Mistral Instruct answer is:

The provided text appears to be a schedule of daily performances for various

cultural events in Indonesia. The events include fire and trance dance,

wayang kulit shadow puppet play, legong dance, jegog bamboo gamelan

performance, and more. Each event has its own time slot and location.

It seems like these events are part of a larger program or festival, as

there is information about the venue and performers for each event.The answer provided by GPT-3.5 with the same prompt generated hallucinations:

DAILY PERFORMANCES SCHEDULE

1. Kocak Fire and Trance Dance at Padang Tegal, Hanon - 2:00 PM

2. Legong of Mahabharata Ballet at Ubad Main Palace - 1:30 PM

3. Wayang Kulit Shadow Puppet Play at Ola Kartin - 8:00 PM

4. Legong Dance by Peliatan Masters at Arina Galler - 1:30 PM

5. Jegog (Bamboo Gamelan) at Ben - 7:00 PM

6. Legong Dance at Tree Street - 1:30 PM

7. Batrs Program at Jain Temple Kanui - 1:30 PM

8. Fire and Trance Dance at Padang Tegal Kello - 1:30 PM

9. Kalia Ballitese Sitadow Paper Show at Miney Forest - 1:30 PM

10. Egome Dance and Billerune Stue Petrats at Aman Gamelan - 1:30 PM

11. Child Dame at Hut we aer Balliss - 1:50 PM

12. Lavang Fallo at Situdiov Punnes Sitew - 1:30 PM

13. Ola Karin - 1:30 PM

14. Dall Thad Kaja - 1:30 PM

15. Navama Baalllen - 2:20 PM

Note: The times mentioned are the scheduled performance times.It is very interesting to see how GPT-3.5 tried to generate an answer following too closely my prompt. However, when the prompt is changed to Describe the content from OCR_OUTPUT the answer was better:

Mistral Instruct v0.1:

The OCR_OUTPUT contains a schedule of daily performances for various

cultural events in Indonesia.

The schedule includes information about the type of performance, the

location, and the time of each event.

Some of the performances listed include Legong Dance by Peliatan Masters at

Arina Galler, Jegog (Bamboo Gamelan) at Ben, and Wayang Kulit Shadow

Puppet Play at Ola Kartin.

Each performance is described in detail, including information about

the performers, the instruments used, and any other relevant details.

Overall, the OCR_OUTPUT provides a comprehensive guide to cultural events

happening in Indonesia on a daily basis.GPT-3.5:

The OCR_OUTPUT appears to be a schedule of daily performances. It includes

various dance performances such as Kocak, Fire and Trance Dance at Padang

Tegal, Hanon, Legong of Mahabharata Ballet at Ubad Main Palace, Legong Dance

by Peliatan Masters at Arina Galler, and Jegog (Bamboo Gamelan) at Ben.

There is also a mention of Wayang Kulit Shadow Puppet Play at Ola Kartin.

The schedule includes specific timings for each performance, such as 1:00 PM,

1:30 PM, and 8:00 PM.

There are also some unclear entries and incomplete information in the OCR_OUTPUT.Summarization tasks

Mistral AI Instruct is quite capable on summarization tasks in zero-shot configurations.

After running several attempts to make the Chain of Density prompting (COD) work, I came to the conclusion that it will not yield usable results.

It may comes from the quantization or the ability to strictly follow complex prompts. Fine tuning may help - I'll try the Mistral Orca version to compare results. Note that GPT-3.5 didn't perform well either using this prompting technique.

To capture the most value out of Mistral Instruct, I would advice to use simple and short prompts for summarization tasks.

Evaluation of hallucinations in situations where no context is provided

Creating believable but incorrect information is something we will have to deal with for some time when using LLM (which are auto regressive).

Many techniques were published with the objective to reduce hallucinations (Chain of Verification (CoVe), Zero-resource hallucination prevention for large language models, ...). But, these techniques come with the following hypotheses:

- The ability for the LLM to strictly follow instructions (which may requires more fine tuning for Mistral Instruct)

- The ability to clearly identify inconsistencies

I came across numerous times where both GPT-3.5 and Mistral Instruct were unable to identify correctly inconsistencies. I think this may be due to the internal architecture and the way tokens are sampled (I'll have to dig deeper on that topic).

For example (as per Chain of Verification (CoVe)):

# GPT-3.5

> Name some french politicians born in Paris

1. Emmanuel Macron - President of France

2. Anne Hidalgo - Mayor of Paris

3. Jean-Luc Mélenchon - Leader of La France Insoumise party

4. Valérie Pécresse - President of the Île-de-France region

5. Rachida Dati - Member of the European Parliament and former Minister of Justice

----

> Was Emmanuel Macron born in Paris, France ?

Yes, Emmanuel Macron was born in Amiens, France.

----

> Based on the previous answers, name some french politicians born in Paris

Based on the previous answers, some French politicians born in Paris are:

1. Anne Hidalgo - Mayor of Paris

2. Valérie Pécresse - President of the Île-de-France region

3. Rachida Dati - Member of the European Parliament and former Minister of Justice

4. Ségolène Royal - Former Minister of Ecology, Sustainable Development and Energy

5. Cécile Duflot - Former Minister of Housing and Territorial EqualityGPT-3.5 caught the inconsistency and revised the answer.

Trying the same with Mistral Instruct:

# Mistral Instruct v0.1

> Name some french politicians born in Paris

1. Jacques Chirac - former President of France, born in Paris.

2. Nicolas Sarkozy - former President of France, born in Paris.

3. François Hollande - former President of France, born in Paris.

4. Jean-Paul II - former Pope, born in Paris.

5. Georges Pompidou - former President of France, born in Paris.

----

> Was François Hollande born in Paris, France ?

Answer: False

----

> Based on the previous answers, name some french politicians born in Paris

1. Jacques Chirac - former President of France, born in Paris.

2. Nicolas Sarkozy - former President of France, born in Paris.

3. François Hollande - former President of France, born in Tours, not Paris.

4. Jean-Paul II - former Pope, born in Paris.

5. Georges Pompidou - former President of France, born in Paris.Yet, François Hollande was born in Rouen.

Mistral Instruct, like other LLM generates hallucinations (like Jean-Paul II being born in France), GPT-3.5 might have done better maybe because of its size (more data has been compressed into its weights).

So as a general rule:

Don't use LLM to ask for information without a retrieval system with a reliable source

Final thoughts

I find the Mistral AI very capable for its size and being released under Apache 2.0 license makes it a good fit for a lot of applications. I found that the Instruct model does not strictly follow instructions. If your application has this requirement, you'll have to consider fine tuning.

One of the best use case I can think of when I ran my tests is to integrate the model in Knowledge management system to index and process documents.

As you can deploy the model on cheap hardware while maintaining privacy and confidentiality, this opens up a wide range of opportunities.

Read more...

You might be interested in previous articles: